What have you been doing this weekend? I have been slacking (don't tell my boss). Most people in IT have heard of Slack by now. If not, you should check it out.

Slack is normally used for team collaboration, but I wanted to see if I could "be less busy" in my private life too. There are just too many apps I cycle between to check whether something is new: Feedly, YouTube, Facebook, LinkedIn, Huffington Post and Twitter, to name a few.

As a private user you will most likely stay in the free tier, which limits you to 10 apps/integrations. But as you will learn, you can get a long way with just a few apps.

So far, I have created these channels:

- general (default, can't leave it)

- message

- news

- reminders

- social

- todo

- youtube

The idea is to put email and other direct messages into the message channel. News is for, well, news. Reminders is linked to my Gmail calendar, where I keep my private appointments and any work appointments that fall outside normal working hours. Social is for Facebook, Twitter and the like. Todo is meant for keeping a to-do list, and finally, the youtube channel is for my YouTube channel subscriptions.

Each channel is highlighted when there is something new in it, and since I am the sole member of the team, I know it is an app or bot that has updated the channel.

So far I have been able to link my Gmail to the message channel using ifttt.com. This is one-way: I get notified of new email and can see its body. I would also like my iMessages in there, but I have yet to find an integration that can do this. There are other integrations that I have not tried yet (I just don't use a lot of messaging apps):

- Skype

- Facebook Messenger (LinkedIn messaging, etc.)

I don't use Facebook Messenger a lot, but I do use it sometimes. There is an integration through Smooch, but that seems to be limited to Facebook Pages (which makes sense from a business perspective).

Zapier (which has loads of advanced Slack integrations) also looks to target Pages.

Such an integration basically lets you get messages sent to a Facebook Page into Slack, and reply to them from Slack as well.

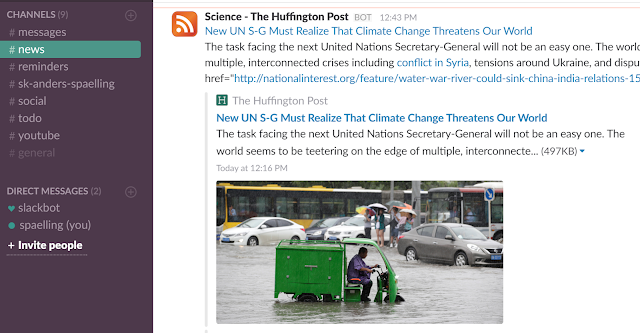

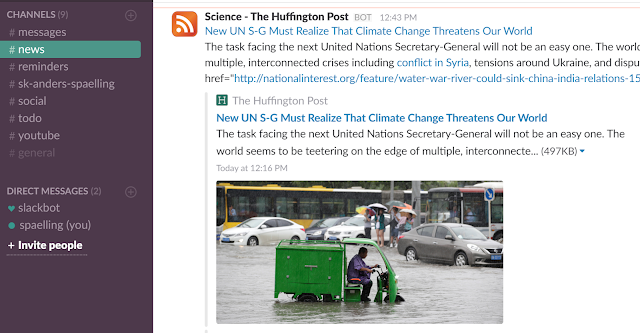

For the news channel I have lots of RSS feeds. This simply uses an RSS app (there are three or so to choose from), with a configuration added for each feed.

*Some science news from Huffington Post*
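For anyone curious about what an RSS app does behind the scenes, here is a minimal Python sketch (not the code of any actual Slack RSS app): it parses an RSS 2.0 feed with the standard library, keeps only items whose link has not been seen before, and formats each one with Slack's `<url|label>` link syntax. The sample feed and the in-memory `seen` set are hypothetical stand-ins; a real setup would fetch the feed over HTTP and persist what it has already posted.

```python
import xml.etree.ElementTree as ET


def parse_rss_items(xml_text):
    """Return (title, link) pairs from an RSS 2.0 document, in feed order."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        items.append((title, link))
    return items


def new_items(items, seen_links):
    """Keep only items whose link has not been posted before, and remember them."""
    fresh = []
    for title, link in items:
        if link and link not in seen_links:
            seen_links.add(link)
            fresh.append((title, link))
    return fresh


def to_slack_text(title, link):
    # Slack renders <url|label> as a clickable link with the label as its text.
    return f"<{link}|{title}>"


# Hypothetical sample feed, for illustration only.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel><title>Example science feed</title>
<item><title>First story</title><link>https://example.com/1</link></item>
<item><title>Second story</title><link>https://example.com/2</link></item>
</channel></rss>"""

if __name__ == "__main__":
    seen = set()
    for title, link in new_items(parse_rss_items(SAMPLE_FEED), seen):
        print(to_slack_text(title, link))
    # A second poll of the same feed yields nothing new.
    print(new_items(parse_rss_items(SAMPLE_FEED), seen))
```

Tracking what has already been posted is what keeps a channel from lighting up again for the same article on every poll.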

Now, a little trick I learned: there was no RSS feed for I fucking love science, so I resorted to https://twitrss.me/, as all (?) new articles are tweeted from the iflscience user. An RSS feed can sometimes be the answer when you want to integrate something with Slack.
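To turn a Twitter account into a feed URL you can paste into the RSS app, something like the sketch below works. The `twitter_user_to_rss/?user=` path is my assumption about how twitrss.me structures its URLs, so check the site for the current format before relying on it.

```python
from urllib.parse import urlencode

# Assumption: twitrss.me serves a user's timeline as RSS at this path,
# with the Twitter handle passed in the "user" query parameter.
TWITRSS_BASE = "https://twitrss.me/twitter_user_to_rss/"


def twitrss_feed_url(handle):
    """Build the RSS URL for a Twitter handle, with or without a leading '@'."""
    return TWITRSS_BASE + "?" + urlencode({"user": handle.lstrip("@")})


if __name__ == "__main__":
    print(twitrss_feed_url("iflscience"))
```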

I have also connected Slalert to the news channel. That is a more generic way to get news. I have yet to get anything from Slalert, but then I have not given it a lot of keywords yet. It simply connects to a Slack webhook, and whenever it finds something matching the keywords you have given it, it posts it to the channel the webhook is connected to.
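Slalert's internals are not public, so this is only a hypothetical Python sketch of the same idea: match a headline against your keywords and, on a hit, POST Slack's minimal incoming-webhook payload (`{"text": ...}` as JSON) to the webhook URL. `WEBHOOK_URL` is a placeholder, and `matches_keywords`, `build_webhook_request` and `post_if_relevant` are made-up names for illustration.

```python
import json
import urllib.request

# Hypothetical placeholder: Slack generates the real URL when you add
# an incoming webhook to a channel.
WEBHOOK_URL = "https://hooks.slack.com/services/T000/B000/XXXX"


def matches_keywords(text, keywords):
    """Case-insensitive check: does any keyword occur in the text?"""
    lowered = text.lower()
    return any(k.lower() in lowered for k in keywords)


def build_webhook_request(url, text):
    """Prepare (but do not send) a POST carrying the minimal {"text": ...} payload."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def post_if_relevant(headline, keywords, url=WEBHOOK_URL):
    """Send the headline to the channel only when it mentions a tracked keyword."""
    if not matches_keywords(headline, keywords):
        return False
    with urllib.request.urlopen(build_webhook_request(url, headline)) as resp:
        return resp.status == 200


if __name__ == "__main__":
    # Inspect the request without sending anything to Slack.
    req = build_webhook_request(WEBHOOK_URL, "New article about Mars")
    print(req.get_method(), req.full_url, req.data)
```

Building the request separately from sending it makes the payload easy to inspect without actually posting anything to a channel.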

The reminders channel is currently linked to my Gmail calendar (there is an app called Google Calendar; it doesn't get much easier than that). You can choose which of your calendars to sync to Slack and how far in advance to be reminded of an event.

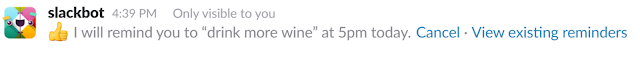

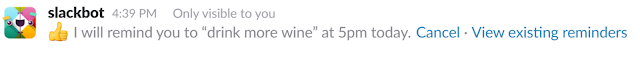

You can also just tell Slackbot to remind you, someone else, or even a channel of something.

*Gotta keep hydrated*

*Resisting the temptation to remind me again in 15 minutes :D*

The social channel was supposed to cover Facebook, Twitter and other social networks. It would be nice to get the Facebook news feed in Slack, but Facebook closed down that part of the API in 2015. I guess it allowed people to get the essence of Facebook on a platform other than Facebook, removing their main source of income (advertisements).

I guess the story will be much the same for other social networks, now or in the future, so I will not even try.

The todo channel was meant to function as a to-do list. I can use Slack posts (a file of sorts) and star them, or pin them to the todo channel; it is possible to pin all kinds of items.

The youtube channel is for my YouTube subscriptions. I can get the feed of each subscribed YouTube channel and add it to my RSS app. I could not find a way to add all of the subscribed channels programmatically, so a bit of manual work was needed, and whenever I subscribe to a new channel I will have to add it here too. I guess it is only a matter of time before someone writes a YouTube app for Slack.
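Part of the manual work can be scripted. As far as I know, each YouTube channel exposes a feed at `https://www.youtube.com/feeds/videos.xml?channel_id=<ID>`, so given a list of subscribed-channel URLs, a small Python sketch like this one can produce the feed URLs to paste into the RSS app. Collecting the channel URLs is still up to you, the IDs below are hypothetical placeholders, and legacy `/user/<name>` URLs are simply skipped because they do not contain a channel ID.

```python
from urllib.parse import urlencode, urlparse

FEED_BASE = "https://www.youtube.com/feeds/videos.xml"


def channel_id_from_url(channel_url):
    """Extract <ID> from a URL like https://www.youtube.com/channel/<ID>; None otherwise."""
    parts = [p for p in urlparse(channel_url).path.split("/") if p]
    if len(parts) >= 2 and parts[0] == "channel":
        return parts[1]
    return None


def feed_url(channel_id):
    """Feed URL for a single YouTube channel."""
    return FEED_BASE + "?" + urlencode({"channel_id": channel_id})


def feed_urls(channel_urls):
    """Turn subscribed-channel URLs into feed URLs, skipping ones without a channel ID."""
    urls = []
    for u in channel_urls:
        cid = channel_id_from_url(u)
        if cid:
            urls.append(feed_url(cid))
    return urls


if __name__ == "__main__":
    # Hypothetical channel IDs, for illustration only.
    subs = [
        "https://www.youtube.com/channel/UC_EXAMPLE_ONE",
        "https://www.youtube.com/channel/UC_EXAMPLE_TWO/videos",
        "https://www.youtube.com/user/someone",  # legacy username URL: no channel ID
    ]
    for url in feed_urls(subs):
        print(url)
```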

So have I really slackified my life? Not quite, but it is a step in the right direction. Slack is still pretty new, and as apps are developed specifically for some of the things I have had to hack a bit to get working, things will get better and more feature-rich.

The focus is obviously on features that can be used by actual teams (not the solo slacker), and it is still limited by the APIs offered by the provider at the other end. Providers will not be willing to fully let go and have users leave their platforms entirely.